6 min read

Ryan Pleggenkuhle | October 18, 2023

“This is going to change everything.”

These are the words Chris Porter declared to his students when ChatGPT by OpenAI rolled out in November of 2022.

Porter, Drake University’s Artificial Intelligence program director, has been teaching AI concepts since the program was commissioned in 2019.

While AI was “definitely booming” before 2019, the advent of generative AI, specifically ChatGPT, has quickly transformed the landscape of content creation.

“It (ChatGPT) was a point of entry into the professional world that we’ve never seen before,” Porter said. “And now, employers know they need to educate their workers to be up to speed on this stuff (generative AI).”

Speaking of employers, how do business decision-makers feel about AI and generative AI in the workplace?

A LinkedIn article included in Porter’s eNewsletter (“Innovation Profs”) in August detailed the feelings of U.S. executives:

For clarity, you should know the distinction between AI and generative AI.

AI is the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. (It’s been around since the 1950s!)

You’ve likely used AI in your everyday life for years. Consider these examples:

All are powered by AI!

Generative AI is the use of AI tools to produce audio, text, images, video, code, and so on.

Generative AI is closely associated with large language models (LLMs), a type of AI model designed to generate human-like, text-based content (e.g., ChatGPT by OpenAI is built on an LLM).

LLMs track context, which allows them to produce on-topic, human-like text in response to the prompts they receive.

1. Let generative AI handle the boring stuff.

“I think that any business is going to have some aspect of their operation involve producing documents that have boilerplate language, or are performing tasks that are pretty routine and repetitive with some slight variation,” Porter said.

Some practical examples could include creating copy for:

2. Supercharge your ideation and brainstorming process.

“You think about the marketing side, there’s always going to be work to do — at the early stages, ideation, and at the later stages, creating content for blog posts, social media posts, that sort of thing,” Porter said. “ChatGPT is really good at ideation. You can ask it, ‘Give me some ideas to do X, Y, and Z,’ and it will spit out any number that you ask.”

Porter added that from there, it’s up to you, the inputter, to vet the outputs. And as a financial institution, you must emphasize the importance of human oversight when leveraging generative AI.

“Maybe half of the ideas are worthless or just ineffective,” Porter said. “That’s where you, the expert in the room, can decide if it’s good or bad.”

Examples of generative AI use in the content creation process could include:

3. Play the game of pretend with role prompting.

This may seem obvious, but it’s worth mentioning — the more detail you provide an LLM (known as “prompting,” the process of instructing an AI to perform a task), the better output you’ll get.

Take it a step further, and you get “role prompting,” a technique that can be used to control the style of AI-generated text.

For example, let’s say you’re looking to hire a new credit analyst and would like ChatGPT to help you write a job description. You can type into the chat box: “Act as a copywriter. Write a job description for a credit analyst.”

ChatGPT can draft copy that outlines the role. From there, you’d just need to add your institution’s specifics (such as the location, your institution’s name, an “About Us” section, and benefits) and the details of the job, such as an overview, responsibilities, qualifications, and how to apply.

“Even when it came out, I don't think people expected that there's going to be a skill to prompting,” Porter said. “But you can make it perform better by telling it how to act.”

You can even ask it to write in a specific tone (e.g., friendly, serious, or funny).
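To make the pattern concrete, here is a minimal Python sketch of how a role prompt could be assembled from a role, a task, and an optional tone. The function name `build_role_prompt` and its parameters are hypothetical and exist only for illustration; the output is plain text you would paste into the chat box, and nothing here calls ChatGPT or any other service.

```python
from typing import Optional


def build_role_prompt(role: str, task: str, tone: Optional[str] = None) -> str:
    """Combine a role, a task, and an optional tone into one prompt string.

    Hypothetical helper for illustration only; it just formats text.
    """
    # Start with the role instruction, then state the task itself.
    prompt = f"Act as a {role}. {task.strip()}"
    # Add the tone request only when one is given.
    if tone:
        prompt += f" Use a {tone} tone."
    return prompt


if __name__ == "__main__":
    # Reuses the article's credit analyst example, with a tone added.
    print(build_role_prompt(
        "copywriter",
        "Write a job description for a credit analyst.",
        tone="friendly",
    ))
```

Keeping the role, task, and tone as separate pieces makes it easy to try several variations of the same request and compare the results before a person vets them.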

While there’s certainly trepidation regarding the use of generative AI in the workplace, there’s no reason to dismiss it.

“To say, ‘We wouldn’t use AI,’ is to say, ‘Yeah, we’re going to look to restrict the possibilities that we might consider,’” Porter said. “An acknowledgment of using AI should be positive, because now we can offer more possibilities.”

Of course, that doesn’t mean to proceed without caution.

Here are the top concerns regarding the use of generative AI:

1. Like a poker shark, know when to call AI’s bluff.

“ChatGPT’s interface looks a lot like a search engine, but if you think that it is a search engine, then you’re going to be both disappointed and misinformed,” Porter said. “Search engines are supposed to give you pointers to other information out in the world. ChatGPT wasn’t trained to be, in a sense, connected to the world in that way.

“It was trained to be internally coherent with respect to language, but it’s not necessarily attuned to the facts.”

Porter gave the example of the lawyer who used ChatGPT to write a legal brief that required an accurate citation of cases — it didn’t work. It was filled with “hallucinations” (inaccuracies, made-up quotes, etc.), thus landing the lawyer in some hot water (Google it).

In another example, Porter attempted to have Claude and Bard (two LLM-based chatbots similar to ChatGPT) summarize an article in which a judge ruled that AI-generated art is not eligible for copyright.

“I was curious if it could summarize an article for me, and it made stuff up,” Porter said. “One of the two said that the judge was from Colorado, but I know the judge was from the District of Columbia. It added to the story in ways that were not correct.”

This leads us to our next and perhaps most important point …

2. This exam isn’t “true-false” — it’s based on style points.

“You have to watch out for those tasks that require accuracy,” Porter said. “It requires a lot of accountability and vetting.

“There are tasks that don’t require accuracy because the aim isn’t to get something right or wrong; it’s whether or not something could be effective or not effective.”

An example Porter gave was to consider an advertising pitch.

“We don’t judge a pitch as to whether it’s true or false, but whether or not it speaks to a person,” Porter said. “If you say, ‘Give me 30 pitches for a given product,’ you don’t go through and say ‘True-False-True’ ... You get to one and say, ‘That’s a bad one’ — or — ‘I like this one.’”

3. Lost in AI copyright limbo? Consider creating your own “humanness” policy.

“If AI-generated art can’t be subjected to copyright, which is a really new development, we have to think about the intellectual property landscape and where this is going to lie in that landscape,” Porter said. “In the space of AI-generated content, maybe there’s enough ‘humanness’ involved with skill and expertise that we say, ‘Yeah, that’s something that’s proprietary that merits some protection,’ but we haven’t gotten there yet.

“So, while we’re in limbo right now, we just need to watch our backs a little bit and be careful.”

Porter added that the terms and policies for OpenAI (ChatGPT’s research and deployment company) are “pretty transparent.”

“If you abide by those, then it seems that’s where the policy is where things stand right now,” Porter said. “We’ll see if those stay put or if there’s enough of a shift in the legal landscape of AI. That’ll be something worth watching for.”

Porter added that organizations will want to consider adding their own AI policy, though industry guidelines are still a work in progress.

“I think there’s going to be a demand for companies to come up with an AI policy,” Porter said. “We don’t even have a framework for developing an AI policy for a company. But that’s something that I think is going to be developed over time.”

Closing Point: Don’t stand still.

“I do think that just standing pat is going to be a mistake because other companies are going to look for a way to separate themselves, and so generative AI certainly promises to be a separator in the same way that data analytics was a separator earlier,” Porter said. “Now, companies would be foolish if they didn’t have a data analytics department operation in some capacity.

“I think the mentality should be that this is really exciting. I would hope that at the executive level, people are saying, ‘Oh, we can do more now with this.’”

*About Chris Porter: Chris Porter is the director of the Artificial Intelligence program at Drake University in Des Moines, IA. The AI program was commissioned to start in 2019, and Porter has been teaching AI concepts since. He holds a bachelor’s degree in mathematics with a minor in philosophy from Gonzaga University. He earned a joint Ph.D. in both disciplines from the University of Notre Dame. Porter, along with fellow Drake professor Chris Snider, publishes a weekly generative AI newsletter called “Innovation Profs.” Email innovationprofs@mail.beehiiv.com to subscribe.

3 min read

Follow these 4 tips to avoid this tricky AI-enhanced scam. Are you familiar with the term “deepfake”? No, it’s not some schoolyard football play...

3 min read

Connect with consumers in new ways with these generative AI audio and visual tools. "To me, the future is that we are all using these tools, and...

3 min read

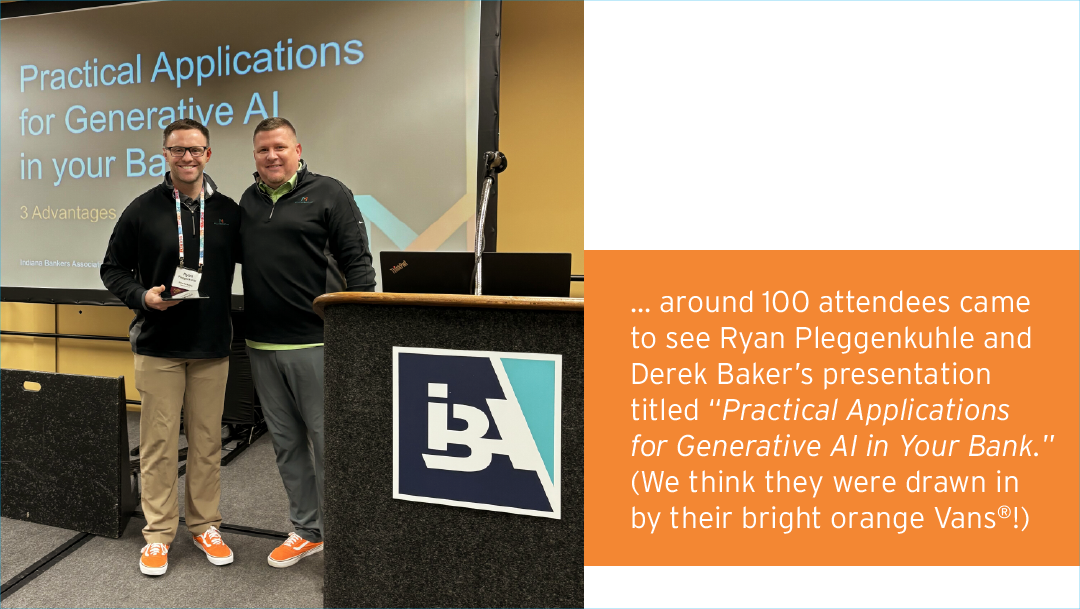

Generative AI Emerges as Major Theme at #IBAMega This Year. Earlier this month, Derek Baker and I had the opportunity to attend and present at the...